Old algebra helps build new algorithms

Nisheeth Vishnoi is a theorist at Microsoft Research who has many pretty results. Some of them require great technical expertise—the main idea may be clear, but the execution is quite difficult. Others of his results require less technical expertise, but their proofs depend on a flash of deep insight. It is rare, in my experience, to find this combination in one person.

Today I would like to talk about his recent talk at GIT on finding a balanced separator in an undirected graph. It was a beautiful talk that explained a proof of the former kind: clear ideas but very technical details.

This work is joint with Lorenzo Orecchia and Sushant Sachdeva and will appear in the next STOC—the paper is entitled “Approximating the Exponential, the Lanczos Method and an  -Time Spectral Algorithm for Balanced Separator.”

-Time Spectral Algorithm for Balanced Separator.”

One thing I really appreciate is that although the algorithm works on graphs and gives you a graph decomposition, the key ingredients are numerical and algebraic. A 37-year old result on polynomial approximations is combined with matrix algebra. As soon as you hear “matrix” you might think that algorithms of time  are involved, where

are involved, where  is the exponent of matrix multiplication, but no, the time stays strictly less even when the

is the exponent of matrix multiplication, but no, the time stays strictly less even when the  -vertex graphs have

-vertex graphs have  edges.

edges.

Advisee Disclaimer

Before I start discussing this work (OSV) I must disclose that I was once Nisheeth’s Ph.D. advisor—perhaps you are always your students’ Ph.D. advisor, even after they graduate. But I do not really know where he got the tremendous ability that he demonstrates now, since I cannot imagine that it came from my advising. He was great as a student, great as a colleague when at Tech, yet now he seems to operate at a higher level. Ah, well.

This phenomenon of students being better than their advisors has happened to me many times. It reminds me of a question that Ken Steiglitz used to ask me years ago. He wanted to know how we could make objects to extremely small tolerances when as humans we could only see fairly large differences? Another way to ask this is:

With tools that operate only to within a tolerance of  how can we make objects that have tolerances of

how can we make objects that have tolerances of  , where

, where  ?

?

Let’s leave that discussion for another day and move on to the result of OSV.

Cutting Graphs

Undirected graphs ares used to model countless things in computer science; if they did not already exist, we would have had to invent them. Hence finding algorithms that decompose graphs efficiently is extremely important to theory in general, and to creating graph algorithms in particular. Decompositions can be used to create recursive algorithms and to solve many other problems on graphs.

One of the reasons that binary trees are often an accessible class of graphs is that they satisfy the following beautiful result:

Theorem: Let  be a binary tree on

be a binary tree on  vertices. Then there is an edge

vertices. Then there is an edge  whose removal cuts the tree

whose removal cuts the tree  into two pieces, and each is of size at least

into two pieces, and each is of size at least  . Moreover the edge

. Moreover the edge  can be found in linear time.

can be found in linear time.

(source, S’09 final)

There are three features to this theorem that make it useful:

- The cut is small: it consists of a single edge.

- The two pieces are balanced: each is at least half the size of the other.

- The cut can be found in linear time.

I would love to report that this theorem on binary trees generalized to all graphs, or even to all bounded degree graphs. Of course this is false. There are bounded-degree graphs such that all balanced cuts have size  . This follows even if we weaken the notion of balanced to allow a piece to be only a

. This follows even if we weaken the notion of balanced to allow a piece to be only a  fraction of the other, and even if we do not require that there is a polynomial time algorithm to find the cut.

fraction of the other, and even if we do not require that there is a polynomial time algorithm to find the cut.

There is a property called conductance of a graph, usually denoted by  , that measures how well the graph can be cut into two pieces. The main result of OSV is a new theorem that settles the question of designing asymptotically optimal algorithms for finding balanced cuts.

, that measures how well the graph can be cut into two pieces. The main result of OSV is a new theorem that settles the question of designing asymptotically optimal algorithms for finding balanced cuts.

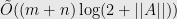

Theorem: There is a  -time algorithm that given an undirected graph

-time algorithm that given an undirected graph  , a constant balance

, a constant balance ![{b \in (0,1/2]} {b \in (0,1/2]}](https://s-ssl.wordpress.com/latex.php?latex=%7Bb+%5Cin+%280%2C1%2F2%5D%7D&bg=e8e8e8&fg=000000&s=0) , and a parameter

, and a parameter  , either finds an

, either finds an  -balanced cut of conductance

-balanced cut of conductance  in

in  , or outputs a certificate that all

, or outputs a certificate that all  -balanced cuts in

-balanced cuts in  have conductance at least

have conductance at least  .

.

The theorem is about as good as one can expect. The cut is balanced and its size is controlled by the conductance as it must be. Also the algorithm runs in  time.

time.

Almost Linear

In this paper and elsewhere you will see the phrase “almost linear,” and it is denoted by adding a tilde to the “O:” as in  . This means that the algorithm in question runs in time

. This means that the algorithm in question runs in time  where

where  is a product of terms that depend only logarithmically on

is a product of terms that depend only logarithmically on  and all other parameters. We would prefer a truly linear time algorithm, but given the state of our understanding, often almost linear is the best we can do. So we do what we can.

and all other parameters. We would prefer a truly linear time algorithm, but given the state of our understanding, often almost linear is the best we can do. So we do what we can.

An Old Theorem

OSV’s paper depends on a rather surprising result by Edward Saff, Arnold Schönhage, and Richard Varga (SSV), which proves the existence of a very good rational approximation to the function  on the entire interval

on the entire interval  . As given in Corollary 6.9 on page 35 of OSV, SSV proved in 1975:

. As given in Corollary 6.9 on page 35 of OSV, SSV proved in 1975:

Theorem: For any integer  , there exists a degree

, there exists a degree  polynomial

polynomial  such that

such that  approximates

approximates  up to an error of

up to an error of  over the interval

over the interval  .

.

Note, the theorem proves only the existence of this good approximation, and this is one of the issues that OSV must overcome.

The reason that having a good rational approximation is important is that OSV uses this on matrices: this allows a certain linear algebra problem to be solved very fast. We will now turn and discuss this theorem.

A New Theorem

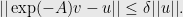

In order to prove their theorem, OSV must be able to compute an exponential of a matrix fast. They do this by using SSV and quite a number of other tricks. The main result is:

Theorem: Given an  SDD matrix

SDD matrix  , a vector

, a vector  , and a parameter

, and a parameter  , we can compute a vector

, we can compute a vector  such that

such that

Further the algorithm runs in time  .

.

Here SDD means that the matrix is symmetric and diagonally dominant. They use the beautiful work of Daniel Spielman and Shang-Hua Teng on approximately inverting matrices. One of the very technical details is the error analysis, since the approximation to the inverses they get from Spielman-Teng interact in a complex way with the error bounds of SSV. As usual read the paper for details.

Open Problems

Can the result of SSV be used elsewhere? Are all linear algebra problems almost linear?

[fixed OSV theorem statement, fixed vertex v --> edge e in tree lemma statement, and sourced lemma]

Like this:

Be the first to like this post.